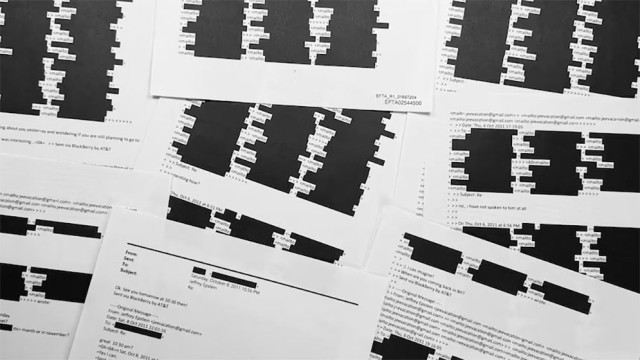

As fraud that relies on artificial intelligence (AI), including fake voices, images, and videos, continues to rise, OpenAI, the creator of ChatGPT, has responded with a detection tool. The company has developed a deepfake detector aimed at identifying AI-generated images, particularly those created by its DALL-E image generator.

The new tool, designed to detect images produced by the DALL-E 3 model, has been put through rigorous testing by researchers. According to OpenAI, the detector correctly identifies 98.8% of images generated by DALL-E 3. However, it does not currently detect images created by other AI image generators, such as those from Midjourney and Stability AI.

Acknowledging the limitations, Sandhini Aggarwal, a researcher at OpenAI, emphasized the company's commitment to ongoing research and improvement of the tool to address the evolving challenges posed by deepfake technology. Aggarwal stated, "There is no effective way to combat deepfake images or videos. We are starting new research to enhance our tool's capabilities."

In addition to developing the deepfake detector, OpenAI is collaborating with various organizations, non-profits, and research institutes to tackle the deepfake problem more broadly. Industry giants such as Google and Meta are also involved through the committee of the Coalition for Content Provenance and Authenticity (C2PA). This collaborative effort aims to curb the spread of fake digital content online and to make the origin and authenticity of digital media easier to verify.
