AI companies, including OpenAI, are moving away from the traditional “bigger is better” approach to developing large language models and are exploring new techniques that let models “think” in a more human-like way. These techniques, which underpin OpenAI’s o1 model, could reshape the AI arms race and change the kinds of resources AI development requires, including energy and hardware.

Ilya Sutskever, a co-founder of OpenAI, recently emphasized the limits of improving models simply by scaling them up with more data and computing power. He advocates a more targeted approach: choosing what to scale rather than just adding resources. The shift is driven by practical obstacles in large-scale model training, namely rising costs, hardware failures, and a shortage of training data.

To address these issues, AI labs are turning to “test-time compute,” a technique that improves a model’s output during inference, the phase when the model is actually being used. Instead of committing to a single answer immediately, the model can generate and evaluate multiple possibilities in real time before choosing the best one, much as a person reasons through a problem. This approach has produced significant performance gains without requiring ever-larger models. OpenAI’s new o1 model uses this method, which lets it work through problems in multiple steps, in a human-like manner.
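As a rough illustration of what “evaluating multiple possibilities” at inference time can mean, the sketch below uses one simple, publicly described form of test-time compute: sampling several answers to the same question and taking a majority vote (often called self-consistency). This is not o1’s actual method, which OpenAI has not published in detail. The `noisy_solver` function is a hypothetical stand-in for a model that answers correctly 60% of the time; every name and number here is an illustrative assumption.

```python
import random
from collections import Counter


def noisy_solver(question: int, rng: random.Random, p_correct: float = 0.6) -> int:
    """Hypothetical stand-in for one sampled model answer.

    The 'correct' answer to `question` is assumed to be question * 2. With
    probability 1 - p_correct the solver returns a random wrong answer instead.
    """
    correct = question * 2
    if rng.random() < p_correct:
        return correct
    # Wrong answers are spread over many values, so they rarely agree with each other.
    return correct + rng.randint(1, 100)


def answer_with_test_time_compute(question: int, n_samples: int, rng: random.Random) -> int:
    """Spend more compute at inference: sample n answers and return the most common one."""
    samples = [noisy_solver(question, rng) for _ in range(n_samples)]
    answer, _count = Counter(samples).most_common(1)[0]
    return answer


def accuracy(n_samples: int, trials: int = 500, seed: int = 0) -> float:
    """Fraction of toy questions answered correctly with n samples per question."""
    rng = random.Random(seed)
    hits = 0
    for q in range(trials):
        if answer_with_test_time_compute(q, n_samples, rng) == q * 2:
            hits += 1
    return hits / trials


if __name__ == "__main__":
    # Same "model", more inference-time samples per question.
    for n in (1, 5, 15):
        print(f"samples per question = {n:2d}  accuracy = {accuracy(n):.2f}")
```

Running it should show accuracy climbing as the number of samples per question grows. The underlying “model” never changes; only the amount of compute spent at inference does, which is the trade-off the paragraph above describes.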

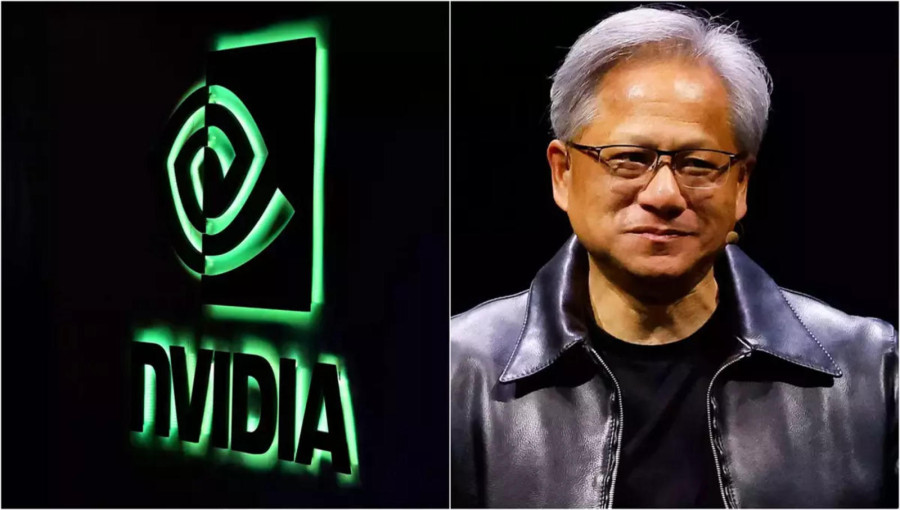

The rise of such methods could reshape the competitive landscape for AI hardware, a market dominated by Nvidia’s chips. If the industry shifts from massive pre-training clusters toward inference, with companies running models on distributed, cloud-based inference servers instead of investing heavily in pre-training infrastructure, Nvidia may face more competition. This possible transition is prompting venture capitalists to reconsider their investments, since demand for Nvidia’s chips could change as these techniques spread.
