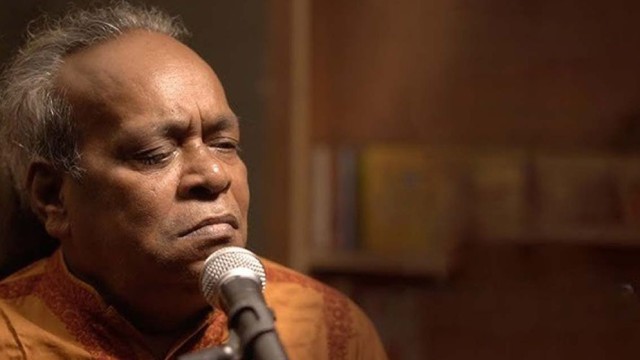

Recently, deepfakes of a number of international female superstars, such as Priyanka Chopra, Alia Bhatt, Rashmika Mandanna, Natalie Portman and Emma Watson, have gone viral. A deepfake video purporting to show actress Tanjin Tisha has emerged just as people are starting to worry about these videos.

This is allegedly the first deepfake video featuring a Bangladeshi celebrity. In November last year, several Facebook pages began sharing the fake video, and in December it went viral.

What is a deepfake: Deepfake technology can precisely replicate a person's voice or face. Using it, objectionable videos can be faked by swapping in someone else's face. Such fake videos are called "deepfake videos", and ordinary people can find it difficult to tell them apart from genuine ones.

How dangerous deepfakes can be in Bangladesh

Experts argue that the emergence of artificial intelligence has made the deepfake technique much easier to use. Beyond Bangladesh, the world is witnessing a startling rise in the misuse of deepfake technology, which is currently available for free or at very low cost.

By superimposing any person's face onto a video, cybercriminals can spread offensive content. Deepfake technology produces these fake videos in great detail, which makes it difficult for the average person to recognise them.

Professor Clare McGlynn of Durham University in the United Kingdom said on Sunday afternoon, "Deepfake harassment incidents are happening all over the world. They have no geographical boundaries. Any woman, anywhere in the world, is susceptible to it. Such instances involving South Asian leaders and celebrities are common."

Many people in Bangladesh watch TikTok videos, Facebook Reels and YouTube Shorts for entertainment, and fake videos that go viral on these platforms are widely believed.

Professor Clare McGlynn has been researching cyberbullying and sexual violence at Durham University in the United Kingdom.

An IT expert thinks the challenge will grow even bigger because deepfake technology has recently become considerably more accessible.

He worries that deepfakes may be widely abused in the future to deceive or degrade people, incite personal animosity, or target politicians and celebrities.

The spread of these fake videos has drawn attention across the world. Qadaruddin Shishir, AFP's fact-check editor for Bangladesh, thinks that in the context of Bangladesh this is an even "bigger concern."

"The problem is even more acute, particularly in Bangladesh, where the majority of people have limited or no technological literacy," he said last Thursday. Deepfake content, he added, can easily mislead people.

Deepfake videos of several politicians, including Nipun Roy and BNP leader Rumin Farhana, surfaced in August last year. The fact-checking platform Dismislab later showed them to be fake. According to Qadaruddin Shishir, the main purpose of deepfake videos is to degrade people in society or in the political sphere.

Deepfake technology is highly dangerous for Bangladesh because many people do not think carefully enough to distinguish genuine videos from fake ones on social media. That is why deepfakes are a more challenging issue in Bangladesh.

Suman Ahmed believes that deepfake videos have spread throughout the world over the last five to six years. Cases of deepfake videos have been increasing in Bangladesh for about a year. However, there are no specific statistics on when deepfake videos first began to be made here.

Earlier, fake videos of several Dhallywood stars, including Mahiya Mahi, Pori Moni and Mehzabien Chowdhury, went viral on social media. However, none of those were deepfakes. Cybercriminals had made those fake videos through manual editing.

Qadaruddin Shishir said, “The level of manipulation was limited in the manually edited videos of the past. Artificial intelligence has erased that line. Now it’s possible to make more convincing fake videos in far less time and at far lower cost than before.”

Natalie Portman, Priyanka Chopra and Rashmika Mandanna are among the many stars who have fallen victim to deepfakes.

Why women are the target

From Hollywood's Natalie Portman and Emma Watson, to Bollywood's Priyanka Chopra, Alia Bhatt and Rashmika Mandanna, to Dhallywood's Tanjin Tisha, the majority of deepfake victims around the world are women.

Several documentaries about deepfake pornography, including ‘My Blonde GF’ and ‘Another Body’, have shown the horrific experiences women have had to go through.

A report published in 2019 by the research organisation Sensity AI stated that 99 per cent of the deepfake videos created worldwide feature women, and that as many as 96 per cent of these deepfake videos were made without their consent.

Professor Clare McGlynn has long worked on sexual violence and cyberbullying. She said, “Deepfake pornography is being used as a weapon to harass women. The pornography industry is basically designed for men. As a result, women have been turned into the target of deepfakes.”

Explaining why women are targeted, Clare McGlynn said, “Society does not have a good record of taking crimes against women seriously, and this is also the case with deepfake porn. Online abuse is too often minimised and trivialised.”

Women have become victims of deepfakes in every corner of the world, whether in the United States, Europe or Bangladesh.

Dhaka-based fact checker Qadaruddin Shishir said that deepfake videos of female celebrities are ‘sellable’, and some people make sexual deepfake content of these celebrities to earn money online. Apart from that, there may be personal motives as well. He also believes that many people make deepfake videos out of revenge.

Tania Haque, professor of women and gender studies at Dhaka University, said last Thursday, “We still don’t perceive women as human beings; rather, they are considered commodities. Women are seen only from a sexual perspective.”

Calls for action

Amid global concern over the misuse of deepfakes, major platforms such as YouTube and Facebook have started taking the issue seriously and have updated their policies. Meanwhile, in India, the government has conducted raids to curb deepfakes.

Actress Runa Khan has demanded punishment for those who make and circulate such deepfake videos in Bangladesh.

Under the Cyber Security Act, 2023, creating and publishing objectionable photographs or videos of someone without their permission is a punishable crime. If deepfake victims file complaints, action can be taken under this act.

Victims can file complaints with the Cyber Police Centre of the Criminal Investigation Department (CID), the Cyber Crime Investigation Department of the Dhaka Metropolitan Police (DMP), and the complaint centre of Police Cyber Support for Women.

Rezaul Masud, additional DIG at the CID’s Cyber Police Centre, said last Thursday that they had not yet received any complaints about deepfakes and that action would be taken if any were filed. He added that cybercriminals are identified at the CID’s digital forensic lab, which is also capable of tracing the people behind deepfakes.

How to tell deepfake videos apart

- Deepfake videos often contain anomalies. The person's body language may look abnormal, or the pace of their speech may be unusual, and facial expressions may not match what is being said. That is why deepfake videos are often cunningly made without sound.

- Usually, more effort goes into replicating the person’s face, so it often fails to match other visible parts of the body. The person's clothing or face may also be inconsistent with their body movements.

- Deepfake videos are normally only a few seconds long, because long deepfake videos are expensive to create.

- In such videos, the shape and build of different body parts, such as the lips, eyes, ears, nose or hair, or the movement of the eyelids, may be inconsistent. In some cases, a reverse image search can turn up sources for a deepfake video on the internet.
